by Original Nifty-Oct 12, 2024

This application is unique in creating the conditions for an interaction whose essence is of a noticeably superior quality, despite (and partly because of) the fact that the model is running completely locally! While at the time of writing the UI is in the early stages of development, it is still workable, easy and stable, allowing the interaction with the model to continue over an extended period. The developers take a distinct and very refreshing ethical position, and are most generous. I am very grateful to them.

Version 1.8.9|United Kingdom

by SteadyBell41031-Oct 7, 2024

Great app, LLMs run really fast on iPhone 16 Pro! I would love some work on the UI, and possibly a download all models button. It would also be fantastic if some larger models were added even though they would be slow. 5 Stars!

Version 1.8.9|United States

by jadtduc-Oct 4, 2024

This app is great, I love that I can interact with LLMs locally and privately, and I'm quite honestly blown away that the devs of Private LLM have been able to make this work! My only suggestion is adding a bit more advanced customization options, like additional sampling parameters (Top K, Min P, Top A, Frequency Penalty, Repetition Penalty, Presence Penalty, Max Tokens, etc), and an option to add manually downloaded LLMs. Additionally, it would be amazing if there were some kind of memory system, similar to the "memory" feature on the ChatGPT app, to enable our LLMs to remember details across multiple conversations. All in all, this app is great for what it is. I'm thoroughly impressed!

Version 1.8.9|United States

by yillish-Sep 25, 2024

Overall this is great. Only private LLM manager I could find that runs on iOS (as a plus the macOS version is available through the App Store!). The UI isn’t the most polished, but that isn’t the end of the world.

Version 1.8.8|United States

Great LLM app! Could have even more potential

by oozeyZiy-Sep 24, 2024

This app is nice! It works well with my iPad 10th gen. It would be even better if the AI was able to run any code from any language and incorporate the code's output in its responses.

Version 1.8.8|United States

by bigelowdeuce-Sep 24, 2024

Still the best. Performance is still great on older hardware: very smooth and idiot-proof.

Version 1.8.8|United States

by TannerMo-Sep 3, 2024

Great app! Always interested in trying newer, more powerful models!

Version 1.8.6|United States

by stacker772-Aug 23, 2024

This app is superb, in terms of its immediate usefulness. It would be above and beyond if there were a history or separate chat type feature as all of the other apps have.

Version 1.8.6|United States

by Concreteo-Aug 7, 2024

Works out of the box. I like that it’s getting updated with LLMs that will work on your phone. I would recommend following the developer’s recommendation for which LLM to use. P.S. The phone does warm up and use battery, but that’s expected.

Version 1.8.6|Australia

by WhatsApp12345-Jul 25, 2024

This app is pretty fantastic! It runs almost smoothly on my phone and I can use it anywhere without needing the cloud. Some additional features that would be cool are being able to browse the internet, interact with documents, get connected with a keyboard for rephrasing or correcting grammar on my phone instead of just on a computer. Also, there could be some improvement in user experience - smoother animations would be awesome. Keep up the good work!

Version 1.8.5|United States

by Pawsative-Jul 7, 2024

Awesome app allowing you to choose which LLMs you use for specific tasks. I think it’s pretty flawless the way it is; great job on the development. I think it’s the best thing available today. Ultimate wish list: functionality to simultaneously query multiple chosen large language models and aggregate their responses into a single answer by a chosen master LLM.

Version 1.8.5|United States

Works Great On iPad Pro M4 13 Inch

by Trickfest-Jun 24, 2024

At least on the high-end iPad, performance is wonderful even on the largest models. Definitely worth $10 USD.

Version 1.8.5|United States

by Jacob.a.s.-Jun 24, 2024

This LLM app is what I was looking for. It is the reason I purchased an iPad M4. Definitely worth the $10. Even the developer is active and available to chat with online, so reach out if you run into any issues. Overall super great, and I cannot wait to see more updates!

Version 1.8.5|United States

Still the best offline AI...

by Joe Rollins-Jun 19, 2024

Still the best offline AI, most stable and has by far the most current datasets available; this is truly a beast of an app for offline use. I strongly recommend! 👍👍👍

Version 1.8.5|United States

by Tony the Vampire-Jun 2, 2024

Can’t believe my iPad is so powerful!! Works a charm on my M1. I downloaded Phi3 with no problem. You can also get it to talk by clicking on text, then speech. I then downloaded another model, which wasn’t shown in the list of installed models. I had to quit the app and go back into it to see the new models, then voila! [It may seem obvious but it is worth mentioning: some users may not quit the app, and may be quick to leave negative feedback.] Can I make a request? Can you add the best model of Aya23 for translations?

Version 1.8.4|United Kingdom

by JSaaSi-May 15, 2024

Nice niche to run models on iOS. Also allows downloading of models from Hugging Face. I'd rather pay the 10 EUR than get something with massive adware.

Version 1.8.4|Finland

Remarkably good app, very active developer

by Conventional Dog-Apr 7, 2024

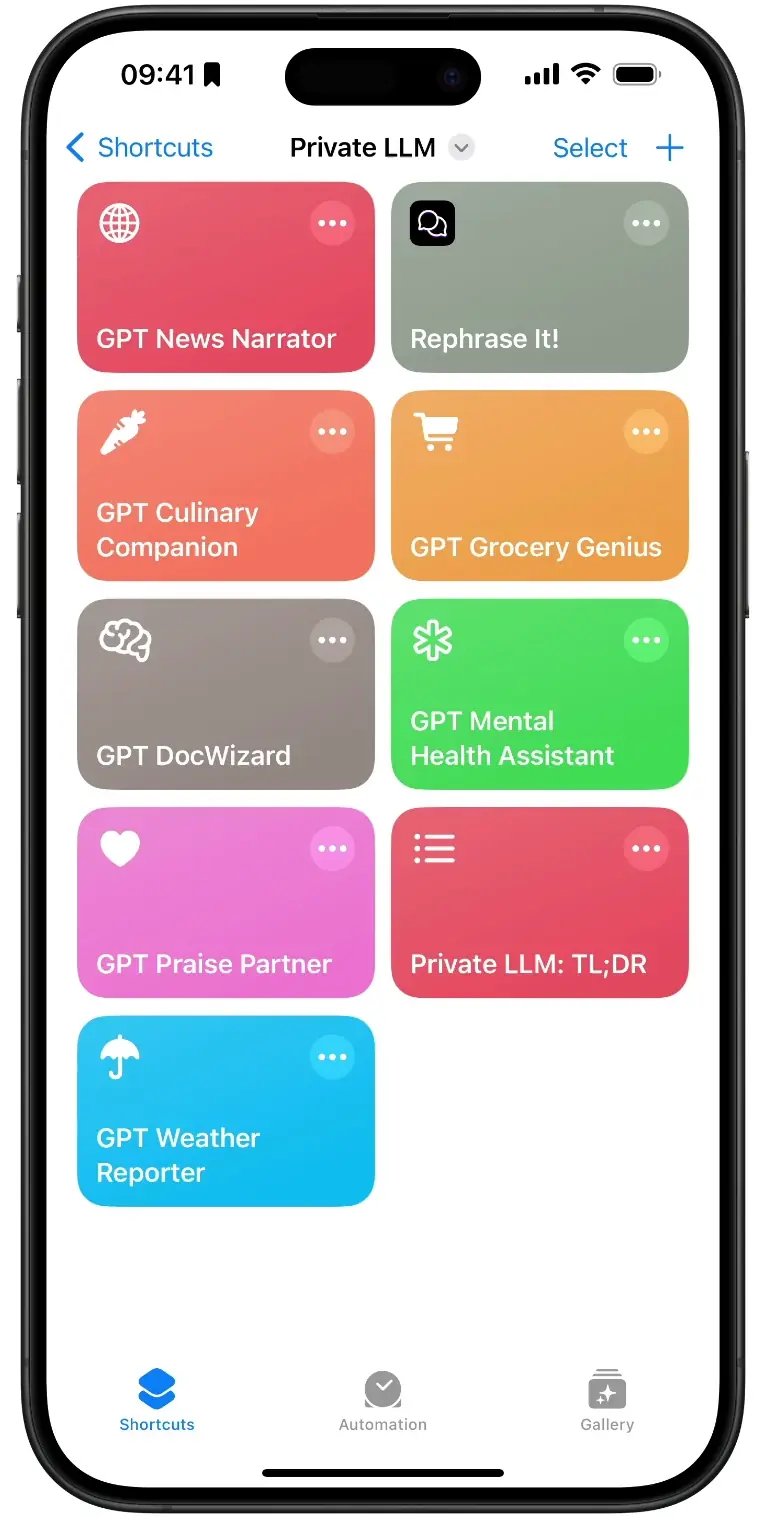

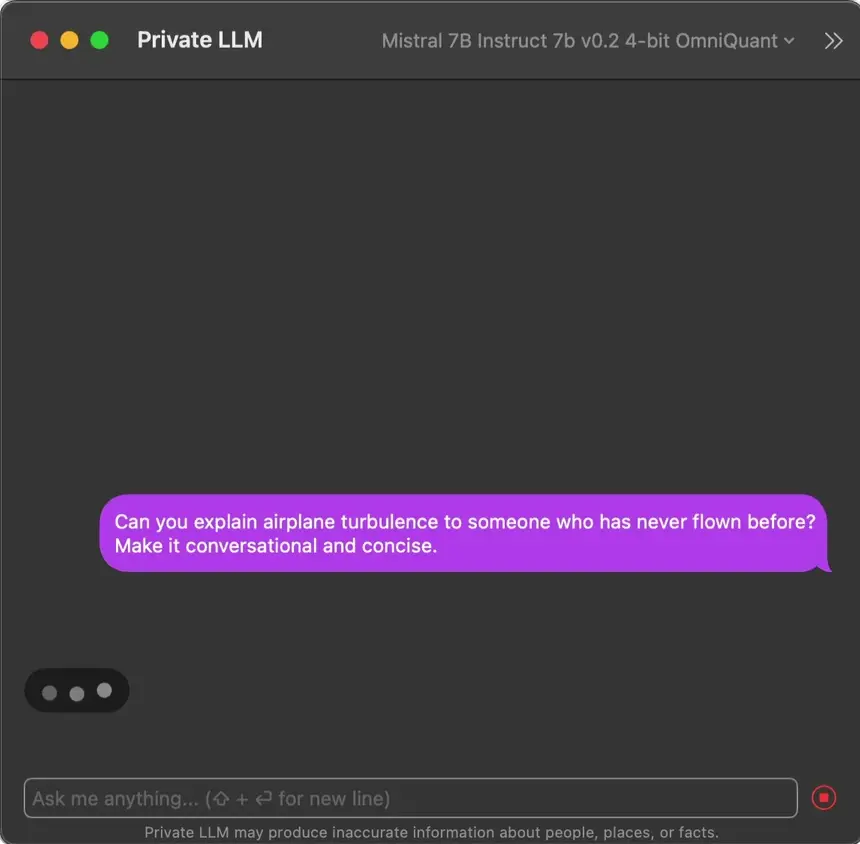

Possibly the single best app purchase I've ever made. The developer is constantly improving it and talking with users on Discord and elsewhere. One price includes Mac, iPhone, and iPad versions (with family sharing). Mac shortcuts can be used to create what amount to custom GPTs. (There's even a user-contributed, quite clever bedtime story generator on the website.) The 10.7B-parameter SOLAR LLM (one of many included) running on my 16 GB M1 MacBook Air gives me fast responses that are subjectively almost on the level of GPT-3.5. For something running completely locally with full privacy, it's remarkable. More RAM allows an even larger choice of language models. But the tiniest model running on my iPhone 12 Pro is usable. (Tip: Experiment with changing the system prompt to fine-tune it for your purposes.)

Version 1.8.3|United States

by Aerigot-Apr 30, 2024

This app is perfect. I have no problem paying 10 dollars for this app. It does exactly what I need it to do.

Version 1.8.4|United States

by armybob_ttv-Apr 12, 2024

In today's world of subscriptions and personal data living on devices that are not ours, this is a refreshing app that I am happy to support. The app has been updated several times since I originally took the plunge (the week it came out, randomly found on /r/macapps), is super responsive and, best of all, has a one-time fee with local privacy.

Version 1.8.3|United States

Gemma 2B just made this a killer app.

by UniqueAirStream-Feb 27, 2024

With the recent addition of Gemma 2B, which is based on Google Gemini, this has just become a killer app for me. This has been the best LLM model and provides the best answers. I hope to see 7B support in the future, and I hope Gemma comes to iPhone and iPad soon. Not only is this the best performing model, but it's all done on device, which makes this even better. Thank you!

Version 1.7.7|United States

by sabere-Feb 10, 2024

Great product. Really love that it manages LLM files for me, really helped during the flight work session! Thank you devs!

Version 1.7.0|Georgia

by WiredHeart86-Feb 14, 2024

After I saw the only review here on the App Store that’s nothing but gibberish, I had to write my own. I love that this app works offline and everything you put into it is private and kept on your device. Plus, there’s no money-hungry subscription service. From what I’ve seen on Reddit, the developer is working hard on improving the app and I’m looking forward to seeing what’s implemented in the coming updates. So far it’s been great for everyday questions and information.

Version 1.6.7|Australia

One of the best apps out there!

by LinxCEO-Dec 24, 2023

I have always loved AI. I got into ChatGPT opening day and have used it religiously since day 1. That being said, it still requires internet access to use, and I’ve gone as far as debating creating my own AI to store locally on my iPhone just in case I’m ever in need of it when I have no internet. There are tons of use cases for this too. What if I’m stranded away from home and I need survival help? What if I’m on a plane that doesn’t have internet? I could go on and on. But I came across a YouTube video that shared this app and I figured, “eh, it’s only a couple bucks and I have storage to spare, why not?” I can safely say it’s one of the best investments I’ve made. Knowing I have a locally stored AI that doesn’t require internet access but still delivers most of what ChatGPT can, at the cost of a couple bucks and a couple gigabytes, is just awesome. I have even stress tested it, thinking maybe the lack of internet access would negatively impact the results, but nope! It works perfectly. There are a couple kinks here and there. But nothing that would make me reconsider downloading the app. For example: Sometimes it won’t remember things we just talked about, so I have to repeat the context. There are some formatting things where it’ll just give you massive blocks of text instead of easily digestible paragraphs. Sometimes it’ll start typing nonsense (but that’d be expected from any locally stored AI). And so on. But there’s no way these things would ever stop me from using it. This is what AI should’ve been from the beginning! A locally stored assistant that can help you with or without internet access. Trust me. It’s more than worth it.

Version 1.6.7|United States

by Lumalay-Nov 19, 2023

The developer is doing a wonderful job at updating the models the app uses, and it’s really noticeable.

Version 1.7.5|Canada

Good app with a privacy oriented focus

by ManicCoder-Nov 11, 2023

This is a very important app and concept in this day and age. As a suggestion, though: in order to improve the AI, users should be allowed to "opt in" to submitting data to better refine the model. Thank you for everything you do!

Version 1.6.4|United States

This is crazy-good, running on my *phone*!

by GeraldShudy-Oct 26, 2023

Nice for Shortcuts that need to “think” a little!!!

Version 1.6.2|United States

by Grdinic-Nov 22, 2023

Love the additional models, this is what I was hoping to see when I paid for an app. It's easy enough to find a free client, so this type of support is just what I hope to see continue!

Version 1.6|United States

Great tool. Looking forward to future features.

by Fuel Pump-Nov 22, 2023

What an easy way to work with LLMs. You can download multiple different models and you can share the application with your family. That's a deal that is hard to beat.

Version 1.6|United States

by Goodblokemouse-Nov 21, 2023

I'm astounded that I can run such capable models on my laptop. The developer is very active and constantly improving the app. It makes running local private models extremely easy and, as of the latest update, has a great range of different models to choose from. I have replaced a decent portion of my OpenAI usage with this app.

Version 1.6|Australia

by reader fanatic MarK-Nov 20, 2023

I run LLMs on Colab and other cloud providers. Private LLM lets me have fun running decent smaller models on my Mac and on my iPad Pro. Recommended!

Version 1.6|United States

Love being able to run a powerful LLM locally

by jfbloom22-Oct 9, 2023

I cannot believe it is as easy as a few clicks to install an LLM like ChatGPT and it is running entirely on my Mac! No internet required, no monthly fee for a plus version. Awesome!

Version 1.5|United States

by N7nathan-Oct 2, 2023

I've been using this app for a couple of months at this point. When it was first released, it was a neat proof of concept, but it only supported 7B models and was overall just too simple to use effectively. With a recent update, a 13B model was released for all Macs with 16GB of memory, and it makes such a huge difference! It's not quite at the same level as ChatGPT 3.5T, but it's close enough that I never use 3.5T anymore; this is my go-to. I greatly appreciate the on-device processing (hallelujah privacy!), and it doesn't even use too much power - my battery still lasts for hours and hours. The performance is also great; my base M1 Air powers right through the prompts. I only have two complaints about the app at this point. 1) The 13B model uses about 12GB of memory by itself, which does force the use of swap on a 16GB Air. Not much the dev can do about this, but it is something to keep in mind. You'll want to close out of other programs before launching this. 2) There still is no feature that has separate conversations. If you want to start a new conversation, you need to delete the existing conversation. I'd love it if we could get separate conversations in a future update; it would make this app so much easier to use. Overall, I love it and do not regret buying it at all. I can't wait to see what future updates bring :)

Version 1.4.1|United States

Incredible Offline AI, GPT 3.5 Comparable

by ramkus-Sep 13, 2023

It is incredible to have my own AI on my laptop. I very much appreciate what the developer does for this. Now I don't rely on ChatGPT anymore; this Wizard 13B model is even smarter than the free version of ChatGPT. Thank you so much. Amazing work!

Version 1.3|Indonesia